Difference between revisions of "Artificial intelligence"

Revision as of 19:34, 10 April 2021

(technology, computer science) | |

|---|---|

| Abbreviation | AI |

| Interest of | • Ajay Agrawal • Sam Altman • Yoshua Bengio • Hans-Christian Boos • Nick Bostrom • Matthew Daniels • Marvin Minsky • Elon Musk • National Security Commission on Artificial Intelligence • Andrew Ng • Peter Norvig • Omar Al Olama • Benjamin Pring • Stuart J. Russell • Lila Tretikov |

| Artificial intelligence is a branch of computer science intended to enable computers to carry out 'intelligent' tasks previously performed by human beings. | |

Artificial intelligence (AI) is the branch of computer science which seeks to re-create the "intelligence" of human beings in software.

Natural language parsing

- Full article: Natural language parsing

Natural language parsing, i.e. understanding ordinary human language, has long been the holy grail of artificial intelligence, as it offers the chance to communicate with computers as easily as with other people. However, its feasibility (or even possibility), together with what actually constitutes "intelligence", remains an open question.

- Full article: Reddit

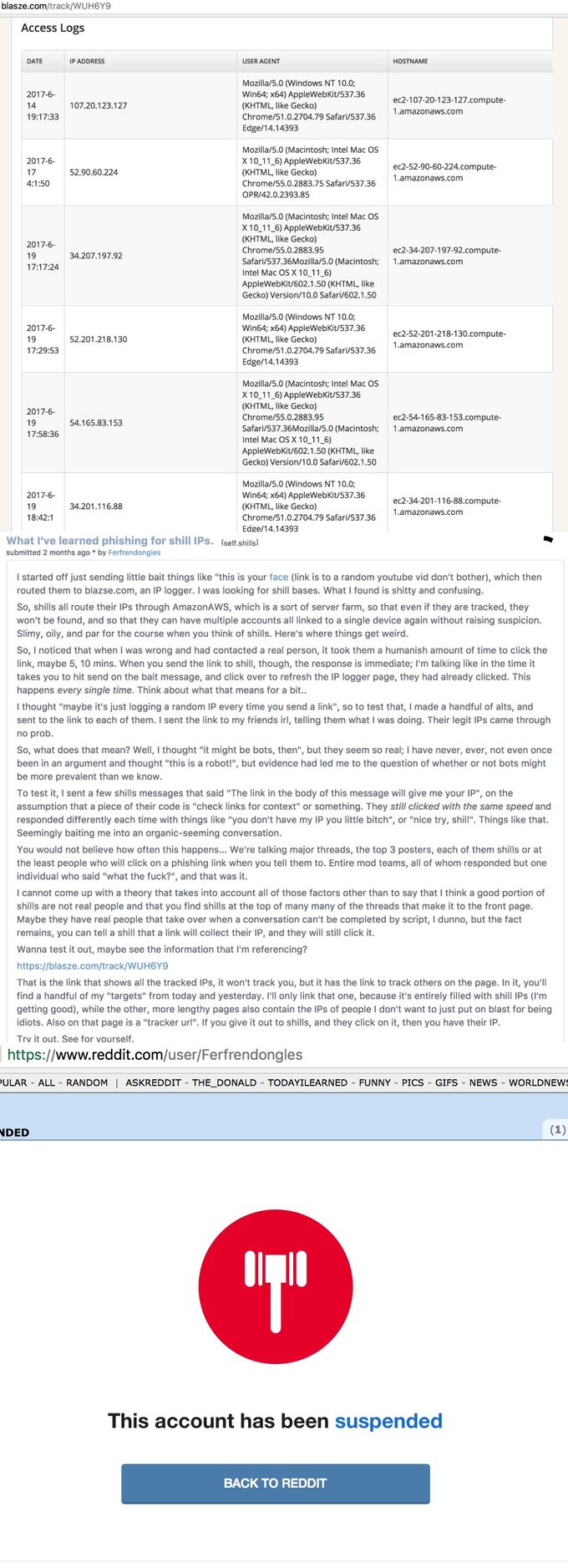

There are reports that by 2017, conversations on major social media platforms were to some extent handled by scripts or AI.[1]

Microsoft Patent

Patents registered by Microsoft in 2021 seem to indicate that natural language parsing technology has matured to the point where it can imitate individual people.[2][3] Initial reports about the development of the technology appeared in late 2016.[4]

Auto-generated biographies and website articles

A number of domains for websites that automatically generate biographies from publicly available data on the Internet were registered in 2019.[5][6][7][8]

Semantic web

- Full article: Semantic web

After creating the World Wide Web, Tim Berners-Lee announced that he was interested in a semantic web, that is, a global web of documents that are machine-readable rather than only human-readable. To this end, the W3C developed the Resource Description Framework (RDF), a data format intended to express meaning, which could in theory be auto-translated into human languages by software. Wikispooks uses RDF through the Semantic MediaWiki software on which this website runs. Each page on this site has a small blue RDF icon in the top right-hand corner, which presents the page in RDF.
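As a rough illustration of how RDF expresses meaning as machine-readable statements, the following sketch serializes a few subject-predicate-object statements in the N-Triples syntax. The `http://example.org/` URIs, the `abbreviation` and `interestOf` property names and the `ntriple` helper are assumptions made for illustration only; they are not the vocabulary that Semantic MediaWiki actually emits.

```python
# Minimal sketch: RDF statements written out as N-Triples lines.
# All example.org URIs and property names below are hypothetical.

def ntriple(subject, predicate, obj, literal=False):
    """Return one RDF statement as an N-Triples line.

    Only backslashes and double quotes are escaped in literals,
    which is enough for the simple strings used here.
    """
    if literal:
        escaped = obj.replace("\\", "\\\\").replace('"', '\\"')
        obj_term = f'"{escaped}"'
    else:
        obj_term = f"<{obj}>"
    return f"<{subject}> <{predicate}> {obj_term} ."


EX = "http://example.org/"  # placeholder namespace
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"

page = EX + "Artificial_intelligence"
triples = [
    ntriple(page, RDFS_LABEL, "Artificial intelligence", literal=True),
    ntriple(page, EX + "abbreviation", "AI", literal=True),
    ntriple(page, EX + "interestOf", EX + "Marvin_Minsky"),
]

print("\n".join(triples))
```

Each line is a self-contained statement, so software can merge or query statements from many pages without having to interpret the surrounding prose.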

Concerns

Global control grid

- Full article: Global control grid

The idea of a global control grid has long been a fantasy of megalomaniac technocrats. As the 21st century progresses, more and more tasks formerly done by human beings are being turned over to software.

Totalitarianism

Except for systems expressly designed for openness, such as Semantic MediaWiki (SMW), AI may be used to create what in Nazi Germany was called Gleichschaltung, i.e. enforced conformity (in thinking). Examples include auto-suggesting search terms, "helping" people by removing "clutter" or other content and, on the other hand, proposing "liked" content. Automation often results in limited choices for the computer layman, be it in automobile driving or web surfing.

Precedents

Early efforts consisted of guessing which action a user was likely to take next (e.g. proposing the last action again) or guessing what "problem" the user was trying to solve. These - mostly annoying - efforts resulted in "professors" popping up while the user was trying to compose a letter (Microsoft Office), or in similar help robots; more sophisticated troubles include search and filter bubbles, targeted ads, censorship, automatic user blocking and heuristic (browser) fingerprinting based on AI.

False positives are of concern, as the algorithms are proprietary: loans, for example, were denied to people living in poor neighborhoods based on secret evaluations of a host of private data unknown to the bank's customer. AI may be used to decide on social control techniques, as the Chinese Social Credit System does, or to deny access to public resources, log-ins, or account creation (Google et al.) as conditioning (aka punishment), in effect bypassing the legal system. AI easily creates an uncontrollable jurisdiction.

Controlling self-fulfilling prophecies

The complexity of social interactions creates feedback loops in such information management systems - something a programmer would call recursion - which lead to self-similar patterns in thinking (if thinking is based on past memory and experience, which it largely is), i.e. repeating the same patterns over and over - hopefully on a smaller scale. The power of this effect can readily be observed in the commercially-controlled media, which mostly cites from and inside its own network of information channels. Other examples include hysteria, panics, delusion and stock market bubbles/crashes.

To avoid oscillation from uncontrolled and possibly dangerous feedback loops, diversity, i.e. random variation in these channels, is necessary. Overuse of AI poses a risk in that these variations are reduced in number and effect, or are abused for purposes of power and control.

"World computer simulations", predictive scenarios, e.g. Event201, and large-scale disaster preparation exercises may - unwittingly or not - fall into this category. They may help to unfold and, at the same time, control a self-fulfilling prophecy.

For example, BlackRock's trading robot (called "Aladdin") can move enough money to create (and exploit) monetary bubbles. Researched Google bubbles include the terms "terrorism", "covid" and "miserable failure" - the last returned "G. W. Bush" as the first hit while the bubble was in full swing (the system had to be halted to stop it skyrocketing). While funny in rare cases, the destructive effects on war, the world economy, personal freedom, public opinion and totalitarianism (resulting in "Gleichschaltung") are underestimated.
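The role of random variation in damping a feedback loop can be sketched with a toy simulation. This is not a model of any real search engine or trading system: the `top_share` function, the `epsilon` parameter and the "always cite the most-cited channel" rule are assumptions chosen purely for illustration. At each step the most-cited channel is cited again, except that with probability `epsilon` a channel is picked uniformly at random.

```python
import random


def top_share(epsilon, channels=5, steps=1000, seed=0):
    """Fraction of all citations held by the most-cited channel.

    epsilon is the (hypothetical) probability of a random pick;
    otherwise the currently most-cited channel is reinforced.
    """
    rng = random.Random(seed)      # seeded for repeatable runs
    counts = [1] * channels        # every channel starts equal
    for _ in range(steps):
        if rng.random() < epsilon:
            pick = rng.randrange(channels)       # random variation
        else:
            pick = counts.index(max(counts))     # feedback: reinforce the leader
        counts[pick] += 1
    return max(counts) / sum(counts)


if __name__ == "__main__":
    for eps in (0.0, 0.2, 1.0):
        print(f"epsilon={eps:.1f}  top share={top_share(eps):.2f}")
```

With `epsilon=0.0` a single channel ends up with almost every citation, while with `epsilon=1.0` the citations stay spread across all channels; intermediate values reduce the concentration without removing it.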

An example

| Page name | Description |

|---|---|

| Algorithm manipulation | Where algorithms on social media are used to promote the Official narrative. |

Related Quotations

| Page | Quote | Author | Date |

|---|---|---|---|

| Sam Altman | “Both publicly and internally, leaders at Microsoft are cheering OpenAI's apparent return to normalcy following days of chaos.

The ChatGPT creator, in which Microsoft has reportedly invested some $13 billion, has been on a roller-coaster ride that began Friday when its board abruptly fired Sam Altman as CEO and ended with his return and the appointment of a new board early Wednesday. Following Altman's ouster, Microsoft swooped in to hire him along with OpenAI co-founder and president Greg Brockman — who quit OpenAI in protest over Altman's termination — to lead a new advanced AI research team at Microsoft, and also offered to hire any other OpenAI employees who wanted to leave. Sam Altman is returning to OpenAI as CEO after his ousting last week, and three board members that participated in his termination have been removed. At that point, Microsoft, already majority owner in OpenAI, was positioned to essentially "acquire" OpenAI by absorbing its talent, after nearly all the startup's 770 or so workers signed a letter saying they would take Microsoft up on the offer unless Altman was reinstated. However, a deal was ultimately reached for Altman to return to OpenAI rather than allowing the $90 billion company to collapse, in what Fortune tech reporter David Meyer wrote is an outcome that "is pretty ideal for Microsoft."” | Fox News Sam Altman Breck Dumas | 2023 |

| Greg Coppola | “I’ve just been coding since I was ten, I have a Ph.D., I have five years of experience at Google, and I just know how algorithms are. They don’t write themselves. We write them to make them do what we want them to do.” | Greg Coppola | July 2019 |

| Microsoft | “Following Altman's ouster, Microsoft swooped in to hire him along with OpenAI co-founder and president Greg Brockman — who quit OpenAI in protest over Altman's termination — to lead a new advanced AI research team at Microsoft, and also offered to hire any other OpenAI employees who wanted to leave.

Sam Altman is returning to OpenAI as CEO after his ousting last week, and three board members that participated in his termination have been removed. At that point, Microsoft, already majority owner in OpenAI, was positioned to essentially "acquire" OpenAI by absorbing its talent, after nearly all the startup's 770 or so workers signed a letter saying they would take Microsoft up on the offer unless Altman was reinstated. However, a deal was ultimately reached for Altman to return to OpenAI rather than allowing the $90 billion company to collapse, in what Fortune tech reporter David Meyer wrote is an outcome that "is pretty ideal for Microsoft."” | Breck Dumas | 2023 |

Related Document

| Title | Type | Publication date | Author(s) | Description |

|---|---|---|---|---|

| Document:Off the Leash: How the UK is developing the technology to build armed autonomous drones | Article | 10 November 2018 | Peter Burt | The United Kingdom should make an unequivocal statement that it is unacceptable for machines to control, determine, or decide upon the application of force in armed conflict and give a binding political commitment that the UK would never use fully autonomous weapon systems |

References

- ↑

- ↑ https://www.forbes.com/sites/barrycollins/2021/01/04/microsoft-could-bring-you-back-from-the-dead-as-a-chat-bot/

- ↑ https://www.myce.com/news/microsoft-patent-to-bring-the-dead-back-to-life-via-chatbot-95784/

- ↑ https://www.theguardian.com/technology/shortcuts/2016/oct/11/chatbot-talk-to-dead-grief

- ↑ [Whois Record for WikiAge.org - Created on 2019-05-07] http://archive.today/2021.04.09-223919/https://www.b.wikiage.org/wife-and-family-details-to-know/

- ↑ [Whois Record for XYZ.ng - Created on 2019-06-11] http://archive.today/2021.04.09-224328/https://www.xyz.ng/en/wiki/who-is-dr-reiner-fuellmich-insight-on-his-wikipedia-wife-and-family-1046990

- ↑ [Whois Record for TheArtsOfEn...ainment.com - ASN: United States Of America AS13335 CLOUDFLARENET, US (registered Jul 14, 2010)] http://archive.today/2021.04.09-224403/https://www.theartsofentertainment.com/who-is-dr-reiner-fuellmich-all-you-need-to-know-about-dr-reiner-fuellmich-family/

- ↑ [Whois Record for CelebPie.com - Created on 2019-08-15] http://archive.today/2021.04.09-224448/https://celebpie.com/dr-reiner-fuellmich-wife-family/